Why data quality architecture matters for enterprises

Ensuring robust data quality is crucial for any enterprise. This article explores key considerations for designing an effective data quality architecture, including storage formats, data schemas, and handling large data volumes. Learn best practices to future-proof your data management strategy.

Ensuring data quality is a critical challenge for enterprises, directly impacting decision-making and operational efficiency. A well-designed data quality architecture is essential to manage this complexity effectively. This article explores the key considerations for creating a data quality system, including where the data is stored, the schema, and the data volume. By understanding these factors deeply, enterprises can set up systems that meet immediate needs and scale with growing data demands.

Let’s dive into each of these topics to provide best practices and insights for managing data quality.

Principal elements of data quality architecture implementation

Where the data is stored: Is the data in a relational database, or is it in open data formats like CSV, JSON, Parquet, Iceberg, or Delta? Different storage formats have unique challenges and capabilities when it comes to data quality management. Understanding the storage type helps in choosing the right tools and strategies for effective data quality monitoring.

Schema of the data: Is it organized in flat tables or as semi-structured data with many nested arrays? The structure of your data can significantly impact how you manage and ensure its quality. Flat tables are straightforward, but semi-structured data with nested arrays requires more advanced tools and techniques to analyze and maintain.

Volume of the data: The scale of the data should be a crucial consideration when planning your data quality (DQ) roadmap. It’s not just about detecting immediate issues but also about future-proofing and ensuring that remediation can be done at scale. Large volumes of data can overwhelm traditional data quality tools, making it essential to plan for scalable solutions.

Choosing the right data quality architecture involves understanding these factors deeply. It’s not just about immediate needs but about setting up a system that can grow and adapt with your data. Below, we will dive deeper into each of these topics to explore their implications and the best practices for managing them.

Data storage

Data quality requires extensive calculations to determine metrics like completeness, uniqueness, and frequencies. This often involves numerous queries per table. Be cautious about pushing all these calculations down to a database or storage layer, as it can result in unbearable costs. If your data is in files, additional complexity is needed in the architecture, such as a query engine (e.g., Presto) to compute those metrics.

With a simple approach of just pushing the queries down, you might be forced into sampling as the only viable option to mitigate costs. However, sampling for data quality or observability is not ideal, as it defeats the purpose of achieving full visibility into the data, creating blind spots and adding randomness to the key signals tracked by observability.

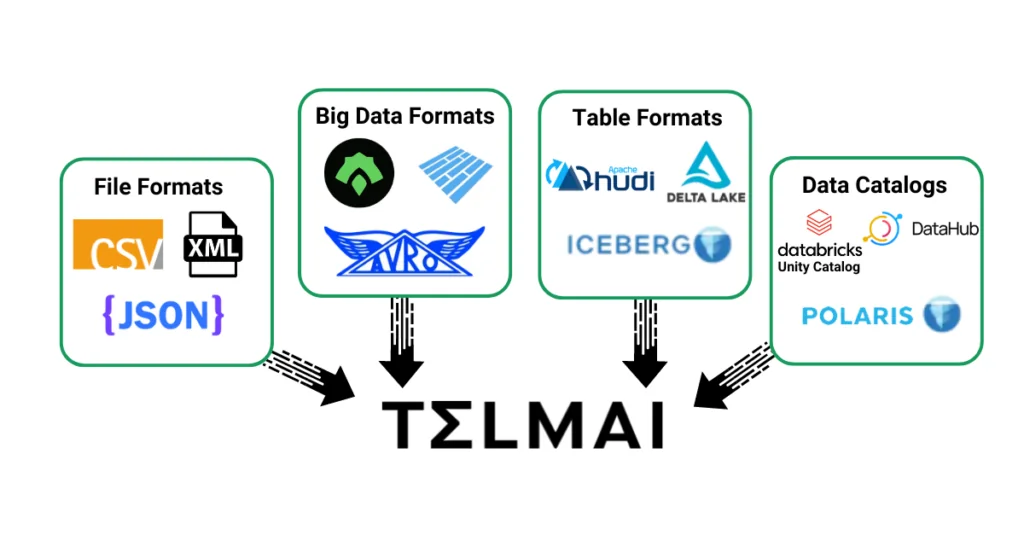

Another aspect to consider is the ability to monitor data anywhere, whether in files of various formats, all kinds of databases, including NoSQL.

Data often travels across these different systems, and quality needs to be checked at various points. Having a single system operating on the same definitions across all these systems is critical for comprehensive coverage and a successful and timely rollout of the DQ/DO implementations.

Schema of the data

When evaluating Data Quality and observability solutions, it’s important to ensure that semi-structured or JSON data formats are covered. Sometimes, these are limited to a few attributes, but often, entire records consist of hundreds of attributes organized in JSON structures and arrays. Most databases offer some support for such data, but this support is designed to make it compatible with SQL (developed for relational models) and expand data from specific attributes. However, for DQ and DO, we need to analyze all attributes, often hundreds. In this case, native SQL functions are no longer satisfactory and will either require multiple queries per attribute or result in undesired outcomes like cross products, which might overload and crash the queries, producing erroneous results.

In summary, if you plan to analyze semi-structured data (and it’s almost guaranteed you will encounter it), you will either have to exclude such data from analysis (not ideal) or look for solutions that can support it natively without the risk of crashing your database by causing a multiple cross product on a billion-row table.

Volume of the data

Historically, one of the most common approaches to addressing the challenges of large volumes of data was sampling. There is a lot of research into various sampling strategies and workarounds with varying levels of success across many domains (including infrastructure monitoring). However, when thinking about DQ and DO, it’s important to ensure nothing is missed, as problems can occur anywhere.

There are basically two main approaches to this:

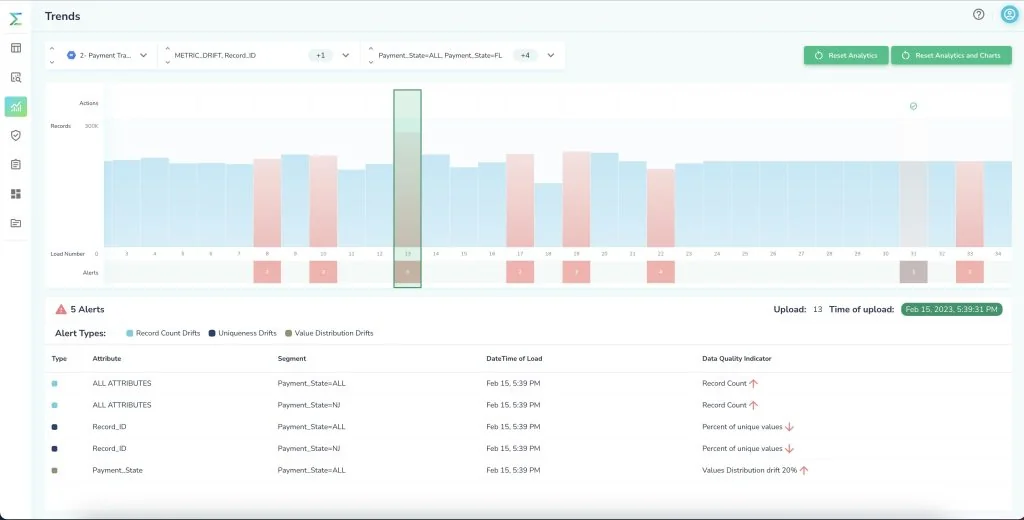

- Sampling: Do a sample, possibly detect some variation or drift in the data, then step outside the tool to manually analyze the data and find what went wrong. This approach obviously won’t work for tracking custom metrics across a large number of dimensions and is very limited in what it can detect. This is a good first step in DO, especially if done in-house.

- Full Volume Analysis: Don’t sample; instead, perform calculations and time-series analysis on the entire volume of data. This approach will detect blind spots and ensure nothing is missed.

However, it requires more sophisticated algorithms and architecture to avoid becoming prohibitively expensive. This is a crucial consideration when selecting the right tool.

Another aspect to consider is the total cost of ownership. Tools that push down queries to the database will significantly increase DB costs, adding to your cloud bill. Tools that are not elastic compute will either require aggressive sampling (to fit on a small cluster) or will inefficiently burn resources by idling large clusters when no data scans are needed.

Conclusion

Effective data quality architecture ensures comprehensive monitoring and analysis across various data formats, schemas, and volumes. This avoids blind spots and inefficiencies, ensuring reliable data quality and observability for enterprises. Understanding and addressing the key considerations of data storage, schema, and volume are essential steps in building a robust and scalable data quality solution.

Passionate about data quality? Get expert insights and guides delivered straight to your inbox – click here to subscribe to our newsletter now.

- On this page

See what’s possible with Telmai

Request a demo to see the full power of Telmai’s data observability tool for yourself.