7 Ways Data Observability for Databricks Is Better with Telmai

Discover the power of our partnership with Databricks, a collaboration driven by customer demand and the capabilities customers have asked for. With most businesses operating on hybrid data architectures, Databricks unifies complex data types into a single lakehouse architecture. Telmai enhances this with seven key capabilities, providing a scalable data observability platform for continuous reliability and efficient detection of data issues across large volumes of diverse data.

This week, we announced our Databricks partnership. A few months in the making, this partnership is the result of our shared customers' demand and the capabilities they have asked for.

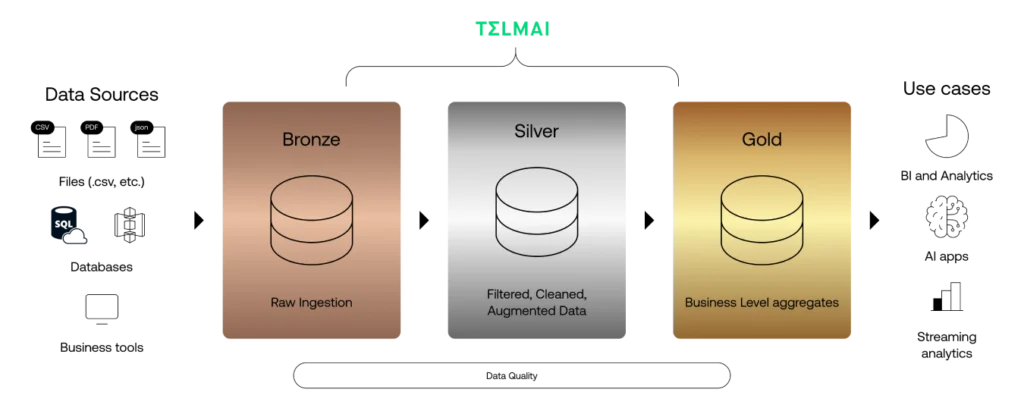

Today, most businesses run on a hybrid data architecture, with data spread across structured, semi-structured, and event-streaming sources. Databricks has built a powerful platform that combines these complex data types into a single lakehouse architecture. This environment requires a scalable data observability platform that can detect data issues across large volumes of diverse data at marginal cost.

Here are 7 capabilities that Telmai brings to the table to ensure the continuous reliability of Databricks lakehouses.

1. Monitoring batch and streaming pipelines

Enterprises rely on the Databricks Lakehouse platform to bring analytics, advanced ML models, and data products into production. This, in turn, raises the importance of data quality, because the adoption of these applications depends on good data.

Telmai’s Data Observability provides the tooling for continuously monitoring the health of lakehouses and Delta Live Tables (DLT). Telmai automatically and proactively monitors batch workloads and streaming data pipelines, detecting and investigating data quality issues as they arise.

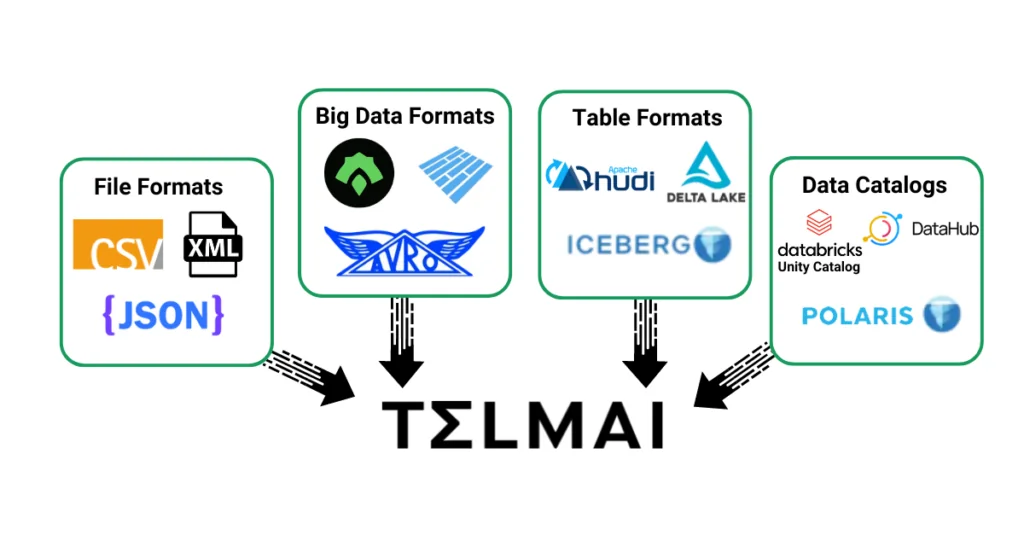

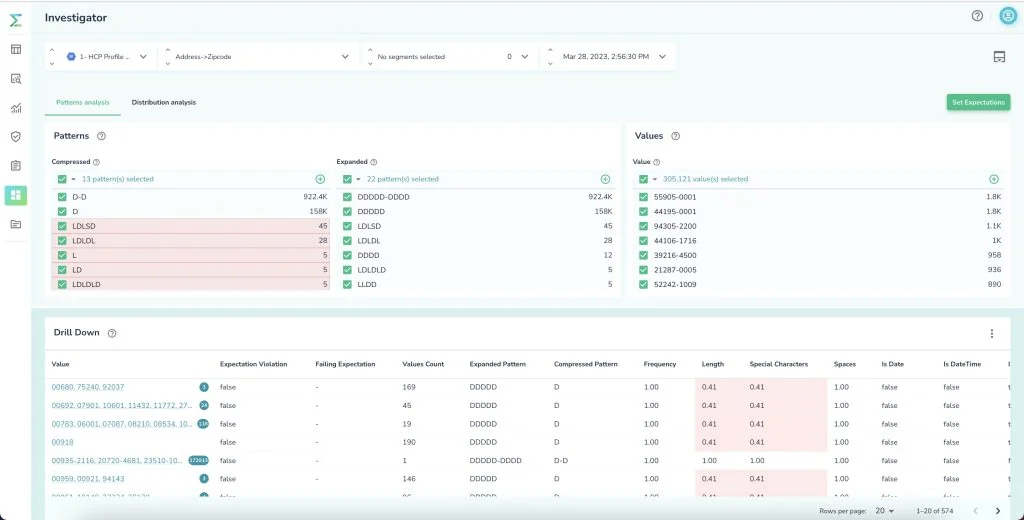

2. Investigating semi-structured and structured data alike

As issues arise, data teams need tooling to investigate and find the root cause of their data quality issues. Because the Databricks lakehouse architecture can house both structured and semi-structured data, any monitoring and observability layer needs to detect and investigate data quality issues regardless of the type and format of the data. However, semi-structured data has nested attributes and multi-value fields (arrays), so conventional approaches require flattening the data and calculating aggregations one attribute at a time. The accumulated overhead of each query with such an approach quickly becomes unmanageable.

Telmai, on the other hand, is designed from the ground up to support very large semi-structured schemas such as JSON (NDJSON), Parquet, and data warehouse tables with JSON files. Data teams can use Telmai to investigate the root cause of issues across all their data.
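To make the flattening overhead described above more concrete, here is a minimal sketch of the naive approach: walk every nested record, flatten it into attribute paths, and compute completeness per path. It is illustrative only and does not represent how Telmai works internally; the function names, the `[]` path notation for arrays, and the sample records are all assumptions made for this example.

```python
from collections import Counter


def flatten(value, prefix=""):
    """Yield (path, leaf_value) pairs for a nested dict/list structure."""
    if isinstance(value, dict):
        for key, child in value.items():
            yield from flatten(child, f"{prefix}.{key}" if prefix else key)
    elif isinstance(value, list):
        # Multi-value fields: every element shares the same "[]" path.
        for child in value:
            yield from flatten(child, f"{prefix}[]")
    else:
        yield prefix, value


def completeness(records):
    """Fraction of records with at least one non-empty value per attribute path.

    Paths that are never populated in any record do not appear in the result.
    """
    filled = Counter()
    for record in records:
        present = {path for path, leaf in flatten(record) if leaf not in (None, "")}
        filled.update(present)
    return {path: count / len(records) for path, count in filled.items()}


# Hypothetical sample data with a nested object and an array of objects.
records = [
    {"id": 1, "address": {"zip": "94107"}, "phones": [{"number": "555-0100"}]},
    {"id": 2, "address": {"zip": None}, "phones": []},
    {"id": 3, "address": {"zip": "10001"}, "phones": [{"number": ""}, {"number": "555-0101"}]},
    {"id": 4, "address": {}, "phones": [{"number": "555-0102"}]},
]
report = completeness(records)
```

Even in this toy version, every record is fully traversed and every attribute path is tracked separately, which hints at why the cost grows quickly with wide, deeply nested schemas.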

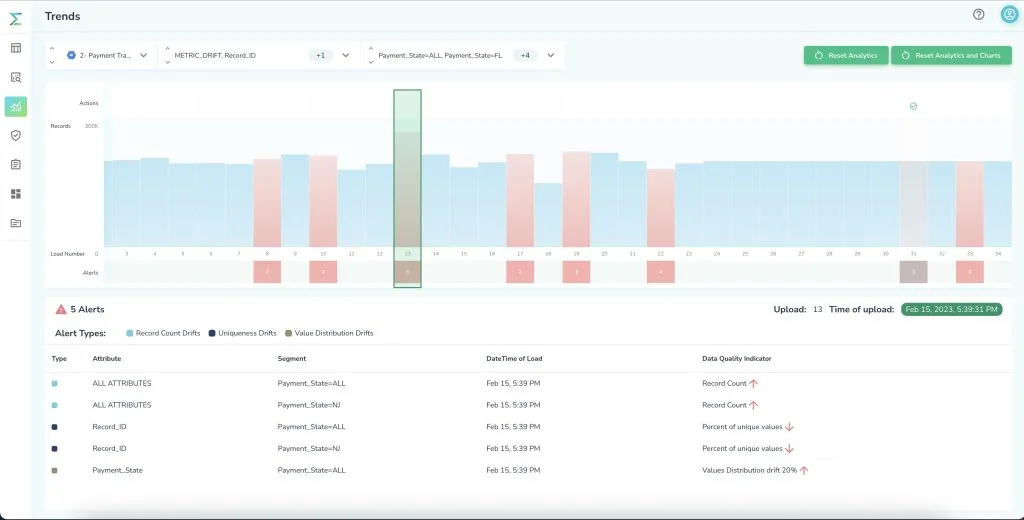

3. Anomaly detection and predictions

Machine learning and time series analysis of observed data can help data teams anticipate the data quality issues that may arise, and set notifications and alerts for when future data drifts from historical ranges or expected values. For example, if the completeness of the zip code field has historically been above 95%, incomplete data that brings this KPI down signals that the issue needs root cause analysis.

Telmai uses time series analysis to learn about values in your data and create a baseline for normal behavior. With that baseline in place, Telmai sets thresholds for every data metric it monitors, which automates anomaly detection. When the data crosses a threshold or falls outside historical patterns, alerts and notifications surface the changes in the data and key KPIs.
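The baseline-and-threshold idea can be sketched in a few lines. The example below is not Telmai's model; it swaps in a simple band of the historical mean plus or minus `k` standard deviations, and the function names, the value of `k`, and the completeness history are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def baseline_thresholds(history, k=3.0):
    """Derive a (low, high) band from historical metric values."""
    center = mean(history)
    spread = stdev(history)  # sample standard deviation; needs >= 2 points
    return center - k * spread, center + k * spread


def is_anomalous(value, history, k=3.0):
    """Return True when a new observation falls outside the learned band."""
    low, high = baseline_thresholds(history, k)
    return value < low or value > high


# Hypothetical daily % completeness of a zip code column.
zip_completeness = [96.1, 95.8, 96.4, 96.0, 95.9, 96.2, 96.3]

drop_detected = is_anomalous(91.0, zip_completeness)  # a sharp drop
normal_day = is_anomalous(96.0, zip_completeness)     # within the usual range
```

A production system would typically also account for trend and seasonality, which a fixed band like this one ignores.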

4. Pipeline control using a circuit breaker

In many cases, identifying data quality issues is the first step in the process. Fully operationalizing data quality monitoring into the pipeline is often the ultimate goal. To fix data quality issues, data teams implement remediation pipelines that need to run and process at the right time and for the right data validation rule.

Telmai can be integrated programmatically into Databricks data pipelines. Using Telmai’s API, data teams can invoke remediation workflows based on the output of data quality monitoring. Repair actions can be taken based on the alert type and details provided through Telmai’s APIs. For example, incomplete data alerts can be flagged for upstream teams to fix, while duplicate data can go through a de-duplication workflow.
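The routing logic behind such a circuit breaker might look like the sketch below. It does not call Telmai's API: the alert dictionaries, their `type` and `table` fields, the handler functions, and the policy of which alert types halt the pipeline are all hypothetical and stand in for whatever a monitoring API actually returns.

```python
def notify_upstream(alert):
    """Placeholder: flag the issue for the upstream team to fix."""
    return f"ticket opened for {alert['table']}"


def deduplicate(alert):
    """Placeholder: queue a de-duplication workflow."""
    return f"dedup job queued for {alert['table']}"


# Hypothetical policy: alert type -> (remediation handler, blocks the pipeline?)
POLICY = {
    "incomplete": (notify_upstream, True),   # cannot be auto-repaired, so halt
    "duplicate": (deduplicate, False),       # repairable, so keep going
}


def evaluate(alerts):
    """Run remediation for each alert and decide whether the pipeline may proceed.

    Unknown alert types have no handler and conservatively open the circuit.
    """
    proceed = True
    actions = []
    for alert in alerts:
        handler, blocks = POLICY.get(alert["type"], (None, True))
        if handler is not None:
            actions.append(handler(alert))
        if blocks:
            proceed = False
    return proceed, actions


ok, ok_actions = evaluate([{"type": "duplicate", "table": "orders"}])
halted, halted_actions = evaluate([{"type": "incomplete", "table": "customers"}])
```

In a pipeline, the downstream step would run only when the first returned value is `True`; treating unknown alert types as blocking is a conservative design choice, not a requirement.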

5. Cataloging high-quality data assets

After checks and balances on the data housed inside the lakehouse are automated, data health metrics are published into the Unity Catalog, so catalog users get additional insights about their data sets, such as data quality KPIs, open issues, certified data labels, and more.

Telmai provides continuous data quality checks and creates a series of data quality KPIs such as accuracy, completeness, and freshness. These KPIs can label data sets in the Unity Catalog for data consumers to easily discover and utilize high-quality data in their analytics, ML/AI, and data-sharing initiatives.

6. No-code, low-code UI for collaboration

While Databricks’ primary users remain highly technical, Databricks Unity Catalog has opened access, discovery, and usage of data quality assets to a broader set of users.

With Telmai, data teams can take this further and invite their business counterparts and SMEs to collaborate on setting data quality rules and expectations and finding the root cause of issues before downstream business impact. Telmai provides over 40 out-of-the-box data quality metrics and has an intuitive, visual UI to make data quality accessible to those who actually have the business context for it.

7. Data profiling and analysis for migrating to Databricks

For teams migrating to the Databricks lakehouse architecture, this capability is perhaps the first one they will need. These migrations need easy, automated profiling and auditing tools to understand data structures and content before migration and to avoid duplications, data loss, or inconsistencies after migration.

Telmai’s low-code, no-code Data Observability product enables teams to profile data and understand data quality issues before migration, then test and validate the data after migration, to ensure a well-designed and high-quality lakehouse environment.
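As a rough illustration of pre- and post-migration validation, the sketch below profiles a source and a target data set and reports the metrics that differ. The profile contents (row count, duplicate keys, null counts), the function names, and the sample rows are assumptions for this example and are not a description of Telmai's profiling.

```python
from collections import Counter


def profile(rows, key):
    """Summarize a data set: row count, duplicate keys, and nulls per column."""
    key_counts = Counter(row[key] for row in rows)
    nulls = Counter()
    for row in rows:
        for column, value in row.items():
            if value is None:
                nulls[column] += 1
    return {
        "row_count": len(rows),
        "duplicate_keys": sum(count - 1 for count in key_counts.values() if count > 1),
        "nulls": dict(nulls),
    }


def diff_profiles(before, after):
    """Return {metric: (before, after)} for every metric that changed."""
    return {m: (before[m], after[m]) for m in before if before[m] != after[m]}


# Hypothetical source rows and a migrated copy where id=3 was lost and id=2 duplicated.
source = [{"id": 1, "zip": "94107"}, {"id": 2, "zip": None}, {"id": 3, "zip": "10001"}]
target = [{"id": 1, "zip": "94107"}, {"id": 2, "zip": None}, {"id": 2, "zip": None}]

changes = diff_profiles(profile(source, "id"), profile(target, "id"))
```

Note that the row count alone would not catch this problem, since both sides have three rows; the duplicate and null metrics are what expose the data loss.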

To learn more and see Telmai in action, request a demo with us here.
